Hands-On with Supervised Learning

A Step-by-Step Guide to Building Models using scikit-learn

Introduction

Objective of This Week's Blog Post

In the last two weeks, we've delved deep into the theoretical aspects of supervised learning. We've explored what supervised learning is, its various types, and the metrics to evaluate models. We also looked into its applications across different domains, its advantages, disadvantages, and what the future holds. While understanding the theory is crucial, the true power of machine learning is unleashed when you get your hands dirty with actual code. Therefore, this week, we're shifting gears to walk you through the practical implementation of supervised learning algorithms.

Brief Overview of scikit-learn

Scikit-learn is an open-source machine learning library for Python. It provides simple and efficient tools for data analysis and modeling, which makes it accessible to everyone from novices to experts. With just a few lines of code, scikit-learn lets us handle everything from basic data preprocessing to training and evaluating complex models. In essence, it is a toolset that helps take our machine learning models from concept to execution.
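To make the "few lines of code" point concrete, here is a minimal sketch of scikit-learn's shared estimator interface. It assumes the libraries from the setup section are installed. The built-in Iris dataset and the two classifiers, LogisticRegression and KNeighborsClassifier, are illustrative choices only:

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier

# Load a small built-in dataset and hold out 20% of it for testing.
X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=0)

# Classifiers share the same fit / predict / score methods, so swapping one
# model for another only changes the constructor call.
scores = {}
for model in (LogisticRegression(max_iter=1000), KNeighborsClassifier(n_neighbors=5)):
    model.fit(X_train, y_train)                 # learn from the training data
    predictions = model.predict(X_test)         # predict labels for unseen rows
    name = type(model).__name__
    scores[name] = model.score(X_test, y_test)  # mean accuracy on the test set
    print(name, "first predictions:", predictions[:5])

print(scores)
```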

By the end of this blog post, you will have a deeper understanding of how to:

Set up your coding environment for machine learning

Preprocess your data for optimal performance

Implement various supervised learning algorithms

Evaluate your models using scikit-learn’s built-in functionalities

Let’s get started!

Setting Up the Environment

Introduction

In this section, we will focus on setting up your machine to run Python code and use scikit-learn for supervised learning. We'll also cover how to install essential Python libraries that you'll need for data manipulation and visualization. Feel free to skip this section if you already have Python and the libraries below installed.

Installing Python

If you haven't installed Python yet, you can download the latest version from the official website. Follow the installation steps for your specific operating system. To check if Python is installed, open a command prompt or terminal and run:

python --version

(On some macOS and Linux systems, the command is python3 --version.)

Using Jupyter Notebook for Easier Workflow

While you can run Python code using a simple text editor and a terminal, using a Jupyter Notebook can significantly streamline your workflow. Jupyter Notebooks allow you to write and run Python code, add Markdown text, and include equations, charts, and plots, all in an interactive environment.

Setting up a Virtual Environment (Optional)

A virtual environment is a self-contained directory that contains a Python interpreter and any additional libraries required for a specific project. This is optional but highly recommended as it helps you manage dependencies and avoid conflicts. To set up a virtual environment:

Open your terminal and navigate to your project directory.

Run the following command to create a virtual environment:

python -m venv myenv

Activate the virtual environment:

On Windows:

myenv\Scripts\activate

On macOS and Linux:

source myenv/bin/activate

Installing Essential Libraries

Once you've activated your virtual environment, install the following libraries:

NumPy: For numerical computations.

Pandas: For data manipulation and analysis.

Matplotlib: For data visualization.

Scikit-Learn: For implementing machine learning algorithms.

To install these packages, run the following command in your terminal:

pip install numpy pandas matplotlib scikit-learn

Verifying Installation

After installing the libraries, it's good to verify that everything has been set up correctly. Run the following Python code snippet:

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

from sklearn import datasets

# Load iris dataset

iris = datasets.load_iris()

print("Iris data shape:", iris.data.shape)

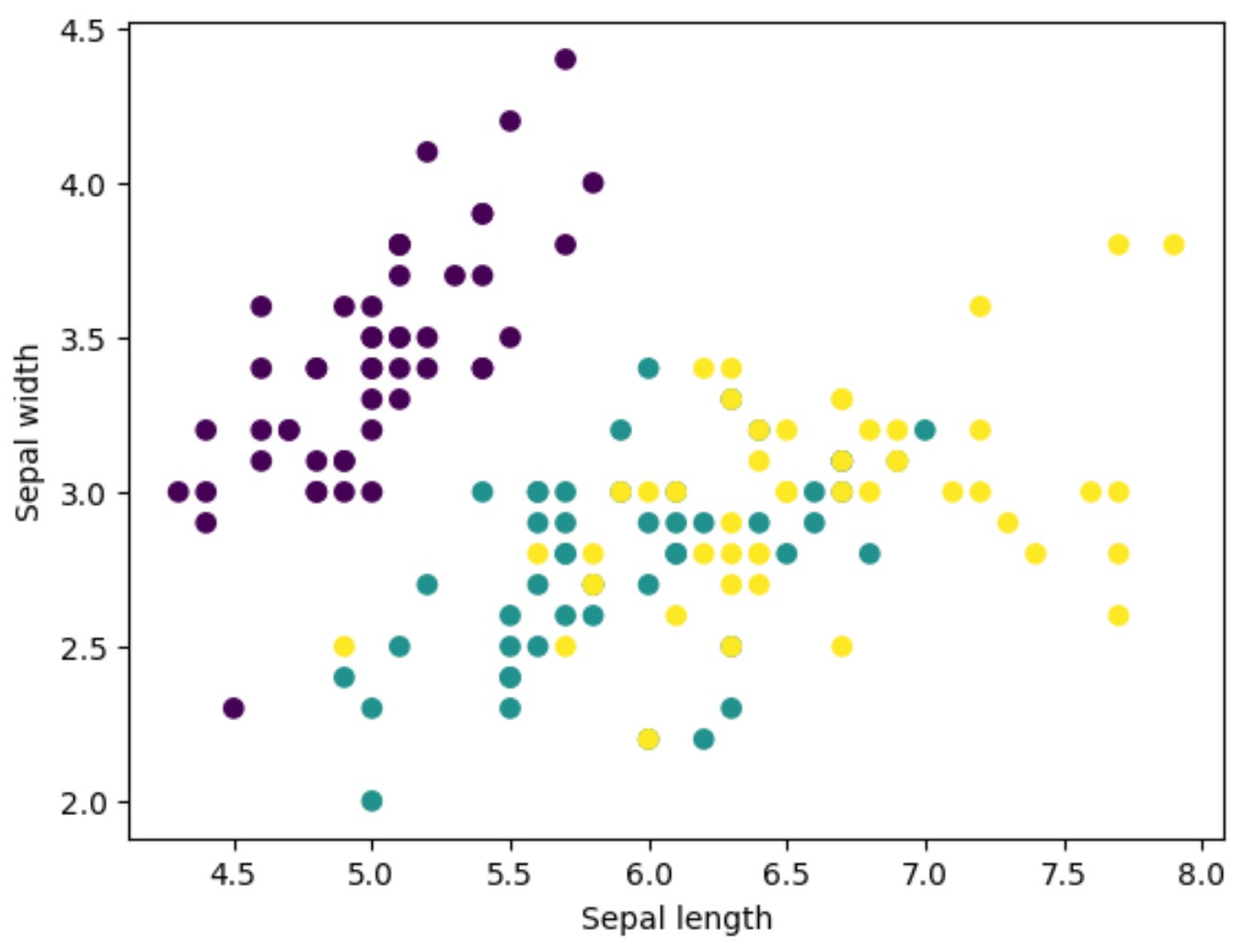

# Plot data

plt.scatter(iris.data[:, 0], iris.data[:, 1], c=iris.target)

plt.xlabel('Sepal length')

plt.ylabel('Sepal width')

plt.show()

If the code runs successfully and you can see the scatter plot of the Iris dataset, congratulations! You have successfully set up your environment.
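Printing the installed versions is another quick check. It also helps when you compare your results with a tutorial or report a problem. A small sketch:

```python
import matplotlib
import numpy as np
import pandas as pd
import sklearn

# Collect the installed version of each library; APIs and defaults can change
# between releases, so versions matter when results differ from a tutorial.
versions = {
    "numpy": np.__version__,
    "pandas": pd.__version__,
    "matplotlib": matplotlib.__version__,
    "scikit-learn": sklearn.__version__,
}
for name, version in versions.items():
    print(f"{name:<13}{version}")
```

Scikit-learn also provides sklearn.show_versions(), which prints a more detailed report that includes system and dependency information.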

Setting up a proper environment is crucial for a smooth workflow in any data science or machine learning project. You're now ready to dive into implementing and evaluating machine learning algorithms with scikit-learn.

Data Preprocessing

Introduction to Data Preprocessing

In the machine learning pipeline, one step that often gets less attention but holds significant importance is data preprocessing. Before feeding your data into a supervised learning algorithm, it's crucial to prepare and clean the dataset to achieve optimal performance. Data preprocessing is a collection of techniques applied to transform raw data into a format that is more suitable for modeling. In this section, we'll explore key elements of data preprocessing, such as importing data, cleaning it, and selecting and scaling features.

Disclaimer

Please note that each of the topics covered in this Data Preprocessing overview could be expanded into one or two weeks' worth of posts on its own. We're covering them at a high level for now and plan to delve deeper into each in future posts.

Importing Data

The first step in data preprocessing is to import your dataset. Data can come in various formats, such as CSV, Excel, or JSON files, or it can come from a SQL database. The Python ecosystem offers libraries for this, most notably pandas. Pandas can read many file formats into a DataFrame, which is a two-dimensional table that is easy to manipulate.

import pandas as pd

# Reading a CSV file into a DataFrame

df = pd.read_csv('data.csv')

After importing the data, it's always a good idea to perform some basic exploratory data analysis (EDA) to understand your dataset's structure, missing values, and basic statistics.
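A few pandas calls cover most of that first look. Because data.csv is only a placeholder, this sketch builds a small made-up DataFrame so it runs on its own. The column names are hypothetical:

```python
import numpy as np
import pandas as pd

# A small, made-up stand-in for the DataFrame read from 'data.csv'.
df = pd.DataFrame({
    "age": [25, 32, np.nan, 41, 32],
    "income": [48000, 61000, 55000, np.nan, 61000],
    "city": ["Paris", "Lyon", "Paris", "Nice", "Lyon"],
})

print(df.head())        # first rows
df.info()               # column dtypes and non-null counts
print(df.describe())    # summary statistics for numeric columns

missing_per_column = df.isna().sum()
print(missing_per_column)  # number of missing values in each column

n_duplicates = df.duplicated().sum()
print("Duplicate rows:", n_duplicates)
```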

Data Cleaning

Once the data is imported, the next step is cleaning it to ensure that it's of the highest quality. This involves handling missing values, removing outliers, and dealing with duplicate entries. Missing values can be treated in several ways: you can remove those entries, fill them in with a specific value (like a mean or median), or use techniques like regression, model-based imputation, or even deep learning to predict the missing value. The choice largely depends on the nature and amount of the missing data.
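The options just described map onto a handful of pandas and scikit-learn calls. The following sketch uses a tiny hypothetical DataFrame. Which option fits best depends on your data:

```python
import numpy as np
import pandas as pd
from sklearn.impute import SimpleImputer

# Hypothetical data with gaps and one duplicated row.
df = pd.DataFrame({
    "feature1": [1.0, 2.0, np.nan, 4.0, 4.0],
    "feature2": [10.0, np.nan, 30.0, 40.0, 40.0],
})

# Option 1: drop any row that contains a missing value.
dropped = df.dropna()

# Option 2: fill gaps with each column's median (less sensitive to outliers than the mean).
median_filled = df.fillna(df.median(numeric_only=True))

# Option 3: scikit-learn's SimpleImputer, which learns the fill values with fit()
# and can then apply them unchanged to new data.
imputer = SimpleImputer(strategy="mean")
mean_imputed = pd.DataFrame(imputer.fit_transform(df), columns=df.columns)

# Remove exact duplicate rows.
deduplicated = df.drop_duplicates()
```

SimpleImputer is convenient in practice because, like a scaler, it can be fit on the training set and then applied to the test set.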

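# Filling missing values in numeric columns with each column's mean
# (numeric_only=True skips text columns, which have no mean)

df.fillna(df.mean(numeric_only=True), inplace=True)

Feature Selection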

Not all features (columns) in your dataset will be relevant for making predictions. Some might be redundant or irrelevant, and can thus be removed. Feature selection techniques can range from simple methods like removing features with low variance or high correlation, to more complex ones like backward elimination, forward selection, and even using machine learning algorithms like Random Forest to identify important features.
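The two simplest filters mentioned above are low variance and high correlation, and they can be sketched as follows. The data and the 0.95 correlation cutoff are illustrative assumptions, not recommended defaults:

```python
import numpy as np
import pandas as pd
from sklearn.feature_selection import VarianceThreshold

# Hypothetical features: 'constant' never changes, 'b' is an exact multiple of 'a'.
df = pd.DataFrame({
    "a": [1.0, 2.0, 3.0, 4.0, 5.0],
    "b": [2.0, 4.0, 6.0, 8.0, 10.0],
    "c": [5.0, 3.0, 4.0, 1.0, 2.0],
    "constant": [7.0, 7.0, 7.0, 7.0, 7.0],
})

# 1) Low-variance filter: with threshold=0.0, only zero-variance features are removed.
selector = VarianceThreshold(threshold=0.0)
selector.fit(df)
kept = df.columns[selector.get_support()]
df_low_var_removed = df[kept]

# 2) Correlation filter: look at the upper triangle of the absolute correlation
#    matrix and drop any column highly correlated with an earlier column.
corr = df_low_var_removed.corr().abs()
upper = corr.where(np.triu(np.ones(corr.shape, dtype=bool), k=1))
to_drop = [col for col in upper.columns if (upper[col] > 0.95).any()]
df_selected = df_low_var_removed.drop(columns=to_drop)

print(list(df_selected.columns))
```

Here the variance filter removes the constant column. The correlation filter then removes b, a perfect multiple of a, which leaves a and c.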

# Dropping a redundant feature

df.drop('redundant_feature', axis=1, inplace=True)

Feature Scaling

Many machine learning algorithms perform better when numerical input variables are scaled to a similar range. This is especially true for algorithms that rely on distance measures, such as k-NN, and for models trained with gradient descent-based optimization, such as neural networks. Tree-based models such as Random Forest, by contrast, are largely insensitive to feature scale. There are various ways to scale your data, such as Min-Max scaling, Standard scaling (Z-score normalization), and Robust scaling. Scikit-learn provides a class for each: MinMaxScaler, StandardScaler, and RobustScaler.
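The three scalers differ mainly in how they react to outliers. This sketch applies each one to a single made-up feature that contains an extreme value:

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler, RobustScaler, StandardScaler

# One made-up feature with an outlier (100.0).
x = np.array([[1.0], [2.0], [3.0], [4.0], [100.0]])

minmax = MinMaxScaler().fit_transform(x)      # (x - min) / (max - min), range [0, 1]
standard = StandardScaler().fit_transform(x)  # (x - mean) / standard deviation
robust = RobustScaler().fit_transform(x)      # (x - median) / interquartile range

for name, scaled in [("min-max", minmax), ("standard", standard), ("robust", robust)]:
    print(f"{name:>8}: {np.round(scaled.ravel(), 3)}")
```

The outlier squeezes the min-max and standard-scaled values of the other four points into a narrow band. RobustScaler centers on the median and divides by the interquartile range, so those points stay spread out at -1, -0.5, 0, and 0.5, and the outlier sits far away at 48.5.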

from sklearn.preprocessing import StandardScaler

scaler = StandardScaler()

df[['feature1', 'feature2']] = scaler.fit_transform(df[['feature1', 'feature2']])

In a real project, fit the scaler on the training set only and then apply the same transformation to the test set. Fitting it on the full dataset lets information from the test data leak into training.

Data preprocessing is a vital step in the supervised learning process. Properly cleaned and prepared data can speed up training and often leads to more accurate models. As we have seen, Python libraries like pandas and scikit-learn offer a wide array of functions that make data preprocessing straightforward. In the next section, we'll build on this clean, preprocessed data to create training and test sets for our machine learning models.

Creating Training and Test Sets

One of the foundational steps in any machine learning project is the separation of your dataset into training and test sets. The reason for this separation is straightforward yet critical: we need a way to evaluate how well our machine learning model is likely to perform on unseen data. The training set is used to teach the model, while the test set is used to evaluate its generalization ability. In this section, we'll delve into the methods and best practices for creating these subsets of your dataset.

Why Random Splitting?

The most common approach to creating training and test sets is random splitting: the data is shuffled and then divided into two separate sets. The goal is for each subset to be a good representation of the overall data, which helps keep our evaluation metrics from being overly optimistic or pessimistic. Without shuffling, any ordering in the data (for example, rows sorted by date or by class) could leave the training and test sets with systematically different distributions. In that case, the test score would say little about how the model performs on genuinely new data.
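The idea behind random splitting fits in a few lines. This hand-rolled sketch shuffles the row indices before slicing and contrasts the result with naively slicing sorted data. Note that random_split is a hypothetical helper, not a scikit-learn function:

```python
import numpy as np


def random_split(X, y, test_fraction=0.2, seed=0):
    """Shuffle row indices, then use the first test_fraction of them as the test set."""
    rng = np.random.default_rng(seed)
    indices = rng.permutation(len(X))
    n_test = int(round(len(X) * test_fraction))
    test_idx, train_idx = indices[:n_test], indices[n_test:]
    return X[train_idx], X[test_idx], y[train_idx], y[test_idx]


# Data stored in sorted order: all class-0 rows first, then all class-1 rows.
X = np.arange(20).reshape(10, 2)
y = np.array([0] * 5 + [1] * 5)

# Slicing off the last 20% without shuffling puts only class 1 in the test set.
print("Unshuffled test labels:", y[-2:])

X_train, X_test, y_train, y_test = random_split(X, y, test_fraction=0.2, seed=0)
print("Shuffled test labels:  ", y_test)
```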

The Golden Ratio: 70-30, 80-20, or Something Else?

How much data should you allocate to the training set versus the test set? The ratio is often dictated by the size and nature of your dataset. A common practice is to allocate 70% to 80% of the data for training and the remaining 20% to 30% for testing. However, these numbers aren't set in stone. If you have a very large dataset, even a 10% holdout for the test set might be sufficient. The key is to ensure that your test set is large enough to provide a statistically meaningful evaluation.
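One rough way to judge whether a test set is large enough is to look at how uncertain an accuracy estimate from it would be. If we assume test samples are independent, the standard error of an accuracy p measured on n samples is approximately sqrt(p(1 - p) / n). The sketch below uses a hypothetical helper to tabulate this for a model with 90% accuracy:

```python
import math


def accuracy_standard_error(accuracy, n_test):
    """Approximate standard error of an accuracy measured on n_test independent samples.

    Uses the normal approximation to the binomial: sqrt(p * (1 - p) / n).
    """
    return math.sqrt(accuracy * (1 - accuracy) / n_test)


for n_test in (100, 1_000, 10_000):
    se = accuracy_standard_error(0.9, n_test)
    print(f"n_test={n_test:>6}: accuracy 0.90 +/- {1.96 * se:.3f} (approx. 95% interval)")
```

With 100 test samples, the approximate 95% interval is about ±0.059. It shrinks to about ±0.019 with 1,000 samples and ±0.006 with 10,000. This is why a small percentage can be enough when the dataset is very large.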

Stratified Sampling

In certain cases, especially when dealing with imbalanced classes, random sampling can lead to a test set that is not representative of the overall class distribution. This is where stratified sampling comes into play. Stratified sampling ensures that the class distribution in the test set is similar to that in the entire dataset, thereby making the evaluation metrics more reliable. For example, if 20% of your entire dataset belongs to Class A, then stratified sampling ensures that approximately 20% of your test set will also belong to Class A.

Using scikit-learn's train_test_split

For those looking to implement this in Python, the train_test_split function from scikit-learn is an excellent tool. It takes care of shuffling the data and splitting it into training and test sets, and it even allows for stratified sampling. Here's a simple example:

from sklearn.model_selection import train_test_split

# X and y are your features and labels

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42, stratify=y)

The test_size parameter specifies the proportion of the data held out for the test set, and random_state fixes the shuffle so the split is reproducible. When you pass the target labels to the stratify parameter, it keeps the class distribution in the training and test sets similar to that of the full dataset.
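To see stratification at work, this sketch splits a hypothetical imbalanced label vector (90 samples of class 0 and 10 of class 1) with and without the stratify argument:

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Hypothetical imbalanced labels: 90 samples of class 0, 10 of class 1.
X = np.arange(100).reshape(-1, 1)
y = np.array([0] * 90 + [1] * 10)

_, _, _, y_test_plain = train_test_split(X, y, test_size=0.2, random_state=1)
_, _, _, y_test_strat = train_test_split(X, y, test_size=0.2, random_state=1, stratify=y)

print("Class-1 share overall:     ", y.mean())
print("Class-1 share, plain split:", y_test_plain.mean())
print("Class-1 share, stratified: ", y_test_strat.mean())
```

With stratify=y, the 20-sample test set contains exactly 2 class-1 samples, matching the overall 10%. In the plain split, the share depends on the random shuffle.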

Properly creating training and test sets is an essential step in the machine learning pipeline. While the process can be straightforward, paying attention to details like stratified sampling can significantly impact the reliability of your model evaluation. Whether you're a beginner or an experienced data scientist, adhering to these best practices ensures that you're setting your model up for success.
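Splitting first has a practical consequence: preprocessing steps such as scaling should learn their statistics from the training set only. A scikit-learn Pipeline enforces this automatically. The sketch below uses the Iris dataset and LogisticRegression as illustrative choices:

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=42
)

# fit() scales X_train and trains the model on it; score() reuses the
# training-set scaling statistics when transforming X_test.
model = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))
model.fit(X_train, y_train)
test_accuracy = model.score(X_test, y_test)
print(f"Test accuracy: {test_accuracy:.3f}")

# The fitted scaler's means come from the training rows alone.
scaler = model.named_steps["standardscaler"]
print("Scaler means:", np.round(scaler.mean_, 3))
```

Because the pipeline is a single estimator, you can also pass it directly to tools such as cross_val_score, which then refits the scaler inside each fold.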

Implementing Algorithms

Linear Regression with Code Examples

Linear Regression is one of the most straightforward yet powerful algorithms in the supervised learning domain. It is primarily used for regression tasks where the objective is to predict a continuous output variable based on one or more input features. To demonstrate how to implement linear regression in Python, we'll use the scikit-learn library, which offers an easy-to-use interface for a wide array of machine learning algorithms.

To begin, make sure you've installed scikit-learn; if not, you can do so by running pip install scikit-learn. Now, let's import the required modules:

from sklearn.model_selection import train_test_split

from sklearn.linear_model import LinearRegression

from sklearn.metrics import mean_squared_error

import numpy as np

Next, you'll need a dataset. For demonstration purposes, let's consider a simple synthetic dataset:

# Generate synthetic data

X = 2 * np.random.rand(100, 1)

y = 3 + 4 * X + np.random.randn(100, 1)

# Split the data into training and test sets

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2)

Once you have your data prepared, implementing the linear regression model is as straightforward as the following lines of code:

# Create a Linear Regression model and fit it to the training data

lin_reg = LinearRegression()

lin_reg.fit(X_train, y_train)

# Make predictions on the test set

y_pred = lin_reg.predict(X_test)

# Evaluate the model

mse = mean_squared_error(y_test, y_pred)

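print(f"Mean Squared Error: {mse}")

Outputs:

Mean Squared Error: 0.9607502675102089

And there you have it: a basic linear regression model implemented using scikit-learn! Because the data and the split are generated randomly without a fixed seed, the exact MSE you see will differ from run to run. The noise added to y has a variance of 1, so an MSE close to 1 is about as good as any model can do on this data.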
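The synthetic data was generated as y = 3 + 4x plus noise, so a useful sanity check is to confirm that the fitted intercept and slope land near 3 and 4. The sketch below reruns the setup with a fixed seed, which the original code does not use. It then compares scikit-learn's estimates with the ordinary least squares solution computed directly by NumPy:

```python
import numpy as np
from sklearn.linear_model import LinearRegression

# Same synthetic setup as above, but with a fixed seed so the run is repeatable.
rng = np.random.default_rng(42)
X = 2 * rng.random((100, 1))
y = 3 + 4 * X + rng.standard_normal((100, 1))

lin_reg = LinearRegression().fit(X, y)
print("Intercept:", lin_reg.intercept_)  # expected to land near the true value 3
print("Slope:    ", lin_reg.coef_)       # expected to land near the true value 4

# Ordinary least squares on X_b (X with a leading column of ones for the intercept),
# solved with np.linalg.lstsq, which is numerically safer than inverting X_b^T X_b.
X_b = np.hstack([np.ones((len(X), 1)), X])
theta, *_ = np.linalg.lstsq(X_b, y, rcond=None)
print("Least squares [intercept, slope]:", theta.ravel())
```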

Random Forest with Code Examples

Random Forest is an ensemble learning method that constructs multiple decision trees during training, each trained on a bootstrap sample of the training data. For regression tasks, it outputs the mean of the individual trees' predictions. Averaging many trees makes Random Forest much less prone to overfitting than a single decision tree, and generally more robust.

To implement a Random Forest model, the first steps—installing scikit-learn and importing the required modules—are the same as with Linear Regression. However, instead of LinearRegression, we'll import RandomForestRegressor:

from sklearn.ensemble import RandomForestRegressor

The rest of the implementation follows a similar pattern:

# Create a Random Forest Regressor model and fit it to the training data

rf_reg = RandomForestRegressor(n_estimators=100)

rf_reg.fit(X_train, y_train.ravel())

# Make predictions on the test set

y_pred_rf = rf_reg.predict(X_test)

# Evaluate the model

mse_rf = mean_squared_error(y_test, y_pred_rf)

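print(f"Mean Squared Error: {mse_rf}")

Outputs:

Mean Squared Error: 1.6839190661669807

The n_estimators parameter specifies the number of trees in the forest; you can adjust this number based on your specific use case. On this run, the forest does worse than linear regression. That is expected: the data was generated from a straight line, which linear regression models exactly, whereas a forest can only approximate it with piecewise-constant steps.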
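You can check directly that a random forest regressor averages its trees, because a fitted forest exposes its individual trees through the estimators_ attribute. The sketch below uses freshly generated data of the same form with a fixed seed. The choice of 50 trees is only for illustration:

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor

# Synthetic data in the same form as the linear-regression example, with a fixed seed.
rng = np.random.default_rng(0)
X = 2 * rng.random((100, 1))
y = 3 + 4 * X.ravel() + rng.standard_normal(100)

rf_reg = RandomForestRegressor(n_estimators=50, random_state=0).fit(X, y)

X_new = np.array([[0.5], [1.0], [1.5]])
forest_pred = rf_reg.predict(X_new)

# Each fitted tree is in rf_reg.estimators_; average their predictions by hand.
tree_preds = np.stack([tree.predict(X_new) for tree in rf_reg.estimators_])
manual_pred = tree_preds.mean(axis=0)

print("Forest prediction:       ", forest_pred)
print("Mean of tree predictions:", manual_pred)
```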

Support Vector Machines (SVM) with Code Examples

Support Vector Machines (SVM) are powerful algorithms used for both classification and regression. For classification, an SVM finds the hyperplane that separates the classes with the largest possible margin. For regression, the same idea is adapted: the model fits a function that keeps as many training points as possible within a margin of width epsilon around it, and it penalizes only the points that fall outside that margin.

For regression, scikit-learn offers the SVR (Support Vector Regression) class:

from sklearn.svm import SVR

We reuse the same training and test split, and the steps of model creation, fitting, and evaluation closely resemble those we've seen before:

# Create a Support Vector Regression model and fit it to the training data

svr = SVR(kernel='linear')

svr.fit(X_train, y_train.ravel())

# Make predictions on the test set

y_pred_svr = svr.predict(X_test)

# Evaluate the model

mse_svr = mean_squared_error(y_test, y_pred_svr)

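print(f"Mean Squared Error: {mse_svr}")

Outputs:

Mean Squared Error: 0.9334358078983114

Here, the kernel parameter selects the kernel function the SVM uses, such as 'linear', 'poly', 'rbf' (the default), or 'sigmoid'. A linear kernel suits this dataset because the underlying relationship is linear.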
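For classification, the counterpart class is SVC, which uses the same interface. The following minimal sketch runs on the Iris dataset and assumes a linear kernel with standardized features. Scaling matters here because SVMs are sensitive to feature scale: the margin is measured as a distance.

```python
from sklearn.datasets import load_iris
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=42
)

# Standardize features, then fit a linear-kernel support vector classifier.
svc = make_pipeline(StandardScaler(), SVC(kernel="linear", C=1.0))
svc.fit(X_train, y_train)

y_pred = svc.predict(X_test)
accuracy = accuracy_score(y_test, y_pred)
print(f"Test accuracy: {accuracy:.3f}")

# Support vectors are the training points that lie on or inside the margin.
print("Support vectors per class:", svc.named_steps["svc"].n_support_)
```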

By understanding and implementing these algorithms, you'll gain valuable hands-on experience that complements the theoretical knowledge you've amassed. You'll also get a clearer picture of how each algorithm performs under various circumstances, equipping you with the insights needed to select the most appropriate model for your data.

Model Evaluation in scikit-learn

After you've selected a machine learning algorithm and fitted it to your training data, the next crucial step is to evaluate how well your model performs. In this section, we'll delve into various methods for model evaluation, particularly focusing on how they can be implemented using scikit-learn, a versatile Python library for machine learning.

Why Model Evaluation Matters

Before we get into the specifics, it's important to understand why model evaluation is a critical component in the machine learning pipeline. A model's performance isn't necessarily a straightforward measure. Different problems and objectives require different evaluation metrics. For instance, if you're working on a spam email classifier, you might be more concerned about false positives—where genuine emails are flagged as spam—than other types of errors. Conversely, in medical diagnoses, false negatives—where actual cases go undetected—can be more harmful. By employing the right evaluation metrics, we can tailor our models to the unique constraints and objectives of our problem.
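These two concerns correspond to two metrics. Precision measures how many flagged items were truly positive, so it drops with false positives. Recall measures how many true positives were caught, so it drops with false negatives. Here is a small sketch using scikit-learn's metric functions on made-up labels:

```python
from sklearn.metrics import f1_score, precision_score, recall_score

# Made-up binary labels: 1 = positive class (e.g. "spam" or "disease present").
y_true = [0, 1, 1, 1]
y_pred = [0, 0, 1, 1]

precision = precision_score(y_true, y_pred)  # TP / (TP + FP)
recall = recall_score(y_true, y_pred)        # TP / (TP + FN)
f1 = f1_score(y_true, y_pred)                # harmonic mean of precision and recall

print(f"Precision: {precision:.3f}")
print(f"Recall:    {recall:.3f}")
print(f"F1-score:  {f1:.3f}")
```

Here precision is 1.0 because there are no false positives, and recall is 2/3 because one positive was missed. The F1-score, their harmonic mean, is 0.8.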

Using Built-in Functions for Metrics

Scikit-learn makes it remarkably easy to compute various evaluation metrics. The library comes with built-in functions for calculating commonly used metrics like accuracy, precision, recall, and the F1-score for classification problems. For regression problems, scikit-learn provides utilities for calculating the Mean Absolute Error (MAE), Mean Squared Error (MSE), and the R-squared score.
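The regression metrics follow the same (y_true, y_pred) calling convention. A sketch with made-up values:

```python
from sklearn.metrics import mean_absolute_error, mean_squared_error, r2_score

# Made-up regression targets and predictions.
y_true = [3.0, 5.0, 2.5, 7.0]
y_pred = [2.5, 5.0, 3.0, 8.0]

mae = mean_absolute_error(y_true, y_pred)  # average absolute error
mse = mean_squared_error(y_true, y_pred)   # average squared error
r2 = r2_score(y_true, y_pred)              # 1 - SS_res / SS_tot

print(f"MAE: {mae:.3f}, MSE: {mse:.3f}, R^2: {r2:.3f}")
```

For these numbers, MAE is 0.5, MSE is 0.375, and R-squared is about 0.882.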

For example, to calculate accuracy for a classification model, you could use the following snippet of code:

from sklearn.metrics import accuracy_score

y_true = [0, 1, 1, 1]

y_pred = [0, 0, 1, 1]

print(accuracy_score(y_true, y_pred))

Outputs:

0.75

Confusion Matrix

The confusion matrix is another invaluable tool for understanding how your classification model performs across different classes. It provides a tabular summary of actual versus predicted labels, helping you identify where the model is making mistakes. Scikit-learn provides the confusion_matrix function, which returns the matrix as an array whose rows correspond to the actual classes and whose columns correspond to the predicted classes. To plot it, you can use ConfusionMatrixDisplay.
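For a binary problem the matrix has four cells, and a common idiom unpacks them into named counts. The following sketch reuses the labels from the accuracy example:

```python
from sklearn.metrics import confusion_matrix

y_true = [0, 1, 1, 1]
y_pred = [0, 0, 1, 1]

# For binary labels [0, 1], rows are actual classes and columns are predicted classes,
# so ravel() yields the cells in the order TN, FP, FN, TP.
tn, fp, fn, tp = confusion_matrix(y_true, y_pred).ravel()
print(f"TN={tn}, FP={fp}, FN={fn}, TP={tp}")
```

This gives TN=1, FP=0, FN=1, TP=2, which are the same counts that make up the matrix.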

from sklearn.metrics import confusion_matrix

print(confusion_matrix(y_true, y_pred))

Outputs:

[[1 0]
 [1 2]]

ROC and AUC

The Receiver Operating Characteristic (ROC) curve and the Area Under the Curve (AUC) are excellent tools for evaluating the trade-off between the true positive rate and the false positive rate across all decision thresholds. Because they do not depend on a single threshold, they are more informative than accuracy on imbalanced datasets. For heavily imbalanced problems, however, a precision-recall curve is often more revealing. Scikit-learn offers the roc_curve and roc_auc_score functions for these tasks. Both expect predicted scores or probabilities (for example, from predict_proba) rather than hard class labels.
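Here is a minimal sketch of both functions on four made-up labels and scores. In practice, the scores would come from a fitted model's predict_proba or decision_function:

```python
from sklearn.metrics import auc, roc_auc_score, roc_curve

# Made-up binary labels and predicted scores for the positive class.
y_true = [0, 0, 1, 1]
y_score = [0.1, 0.4, 0.35, 0.8]

# roc_curve sweeps the decision threshold and returns false and true positive rates.
fpr, tpr, thresholds = roc_curve(y_true, y_score)

# roc_auc_score summarizes the whole curve as one number.
auc_value = roc_auc_score(y_true, y_score)

print("FPR:", fpr)
print("TPR:", tpr)
print("AUC:", auc_value)
```

Here AUC is 0.75: of the four (positive, negative) pairs, three rank the positive above the negative. Integrating the curve with sklearn.metrics.auc(fpr, tpr) gives the same area. To draw the curve, plot fpr against tpr with Matplotlib.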

Cross-validation for Robustness

Metrics like accuracy and precision give you a snapshot of your model's performance, but they might be overly optimistic or pessimistic if they are based on a single train-test split. Cross-validation techniques such as k-fold cross-validation offer a more robust evaluation. The training data is partitioned into k subsets (folds), and the model is trained k times. Each time, a different fold is held out for evaluation and the model is trained on the remaining k-1 folds. Scikit-learn provides the cross_val_score function, allowing you to easily implement this technique.
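To make the mechanics visible, this sketch runs 5-fold cross-validation by hand with KFold. It then checks the result against cross_val_score using the same splitter. The synthetic dataset from make_regression and the choice of LinearRegression are illustrative assumptions:

```python
import numpy as np
from sklearn.base import clone
from sklearn.datasets import make_regression
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import KFold, cross_val_score

X, y = make_regression(n_samples=200, n_features=3, noise=10.0, random_state=0)
model = LinearRegression()
kfold = KFold(n_splits=5, shuffle=True, random_state=0)

# Manual k-fold: train on k-1 folds, score on the held-out fold, repeat k times.
manual_scores = []
for train_idx, val_idx in kfold.split(X):
    fold_model = clone(model).fit(X[train_idx], y[train_idx])
    manual_scores.append(fold_model.score(X[val_idx], y[val_idx]))  # R^2 for regressors

# cross_val_score runs the same loop when given the same splitter.
cv_scores = cross_val_score(model, X, y, cv=kfold)

print("Manual:         ", np.round(manual_scores, 4))
print("cross_val_score:", np.round(cv_scores, 4))
print(f"Mean R^2: {cv_scores.mean():.3f} (std {cv_scores.std():.3f})")
```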

from sklearn.model_selection import cross_val_score

# your_model is any scikit-learn estimator, e.g. LinearRegression()

scores = cross_val_score(your_model, X, y, cv=5)

# One score per fold; the mean and spread summarize performance across folds

print(scores.mean(), scores.std())

Evaluating a machine learning model is not a one-size-fits-all approach but rather a nuanced process tailored to the specific problem you're tackling. Scikit-learn offers a rich set of tools to make this process as straightforward as possible. By leveraging these tools, you can fine-tune your model effectively, understand its limitations, and ultimately make well-informed decisions about its deployment in real-world applications.

Conclusion

This week's post aimed to bridge the gap between theory and practice by diving into the hands-on implementation of supervised learning algorithms using scikit-learn. We covered the essential steps, from setting up the environment and preprocessing data to training and evaluating different types of models. The goal was to give you the practical knowledge needed to turn the foundational concepts discussed in previous weeks into actionable insights. As we continue our journey into the world of machine learning, next week's post will focus on fine-tuning your models through hyperparameter optimization. By understanding and applying these techniques, you'll not only improve your model's performance but also gain a deeper appreciation for the intricacies involved in creating robust, reliable machine learning solutions.
