Architects of Imagination: An Introduction to Generative Adversarial Networks

Discover the Foundations and Evolution of GANs in AI's Revolutionary Path

Introduction: Understanding Generative Adversarial Networks

Continuing our exploration of Generative AI, this week we focus exclusively on Generative Adversarial Networks (GANs). Introduced by Ian Goodfellow and his colleagues in 2014, GANs take a different approach from other generative models: instead of fitting an explicit likelihood, they train a generator by pitting it against a second network that judges its output.

We will cover the dual structure of GANs, the Generator and the Discriminator, and examine how the two are trained against each other. We will also look at the main training challenges, such as mode collapse and non-convergence, and at the architectural choices that set these networks apart.

Additionally, we'll explore the evolution of GANs, touching upon significant variants such as DCGAN, CycleGAN, and StyleGAN, each bringing its own advancements and specialized applications.

Core Concepts and Architecture of GANs

GANs are one of the most fascinating breakthroughs in artificial intelligence and machine learning. A GAN is an unsupervised learning method built from two neural networks that compete with each other in a zero-sum game. Since their introduction, GANs have become widely popular for their ability to generate realistic images, music, voice, and text.

Components of GANs

Generator

The Generator in a GAN is a neural network that takes random noise as input and learns to create data resembling the training data. Its primary goal is to produce data (like images) that the Discriminator cannot distinguish from real data. It can be thought of as an art forger, trying to pass off fakes as genuine works.

Discriminator

The Discriminator, another neural network, is the adversary in the GAN setup. It learns to distinguish the generator's fake data from real data. Acting as a critic, it provides feedback to the Generator about the differences between the real and generated data.

Adversarial Training Process

The training process of GANs involves an iterative, competitive game where the Generator tries to maximize the probability of the Discriminator making a mistake, and the Discriminator tries to minimize this probability. This process can be described as follows:

Initially, the Generator produces a random output, which is likely to be easily distinguishable from the real dataset.

The Discriminator evaluates both the real data and the generated data, learning to distinguish between the two.

As training progresses, the Generator improves its output based on feedback from the Discriminator, gradually producing data that is more similar to the real data.

The Discriminator, in turn, improves its ability to differentiate real from fake.

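In the ideal case, this process continues until the Generator produces data so convincingly real that the Discriminator can no longer distinguish fake from real. At that point the Discriminator can do no better than guessing, assigning a probability of 0.5 to every sample, and the game has reached a Nash equilibrium.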
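The loop described above can be sketched in a few lines of plain Python. This is a toy illustration under stated assumptions, not a practical GAN: the real data is drawn from a 1-D Gaussian, the Generator is a single learned shift G(z) = z + b, the Discriminator is a logistic unit D(x) = sigmoid(w*x + c), and the gradients are written out by hand. The function name `train_toy_gan` and all hyperparameters are hypothetical choices made for this example.

```python
import math
import random


def sigmoid(a: float) -> float:
    """Numerically stable logistic function."""
    if a >= 0:
        return 1.0 / (1.0 + math.exp(-a))
    e = math.exp(a)
    return e / (1.0 + e)


def train_toy_gan(steps: int = 2000, batch: int = 64, lr: float = 0.05,
                  real_mean: float = 4.0, seed: int = 0):
    """Alternate one Discriminator step and one Generator step per iteration.

    Assumed toy setup: real data ~ N(real_mean, 1), Generator G(z) = z + b
    with z ~ N(0, 1), Discriminator D(x) = sigmoid(w * x + c).
    """
    rng = random.Random(seed)
    w, c = 0.0, 0.0   # Discriminator parameters
    b = 0.0           # Generator parameter (a learned shift)

    for _ in range(steps):
        real = [rng.gauss(real_mean, 1.0) for _ in range(batch)]
        fake = [rng.gauss(0.0, 1.0) + b for _ in range(batch)]

        # Discriminator: gradient ASCENT on
        # mean(log D(real)) + mean(log(1 - D(fake))).
        grad_w = grad_c = 0.0
        for x in real:
            d = sigmoid(w * x + c)
            grad_w += (1.0 - d) * x
            grad_c += 1.0 - d
        for x in fake:
            d = sigmoid(w * x + c)
            grad_w -= d * x
            grad_c -= d
        w += lr * grad_w / batch
        c += lr * grad_c / batch

        # Generator: gradient DESCENT on mean(log(1 - D(G(z)))),
        # using fresh noise and the updated Discriminator.
        fake = [rng.gauss(0.0, 1.0) + b for _ in range(batch)]
        grad_b = 0.0
        for x in fake:
            d = sigmoid(w * x + c)
            grad_b += -d * w   # d/db of log(1 - D(z + b))
        b -= lr * grad_b / batch

    return w, c, b
```

Calling `train_toy_gan()` returns the final `(w, c, b)`. In this setup the Discriminator's feedback pushes the shift `b` toward the real mean, although, as with full-size GANs, the parameters may oscillate rather than settle.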

The crux of the GAN framework is the optimization problem that the Generator and Discriminator jointly solve. At its core, the GAN setup can be described as a minimax game with the following objective function V(D, G):

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p(x)}[\log D(x)] + \mathbb{E}_{z \sim p(z)}[\log(1 - D(G(z)))]
$$

Here, E denotes the expectation, p(x) is the data distribution, p(z) is the generator's input noise distribution, D(x) is the discriminator's estimate of the probability that x is real, and G(z) is the data generated by the generator.
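To make the objective concrete, here is a small sketch that estimates V(D, G) from a batch of Discriminator outputs: the mean of log D(x) over real samples plus the mean of log(1 - D(G(z))) over generated samples. The function name `value_function` and the example probabilities are made up for illustration.

```python
import math


def value_function(d_real, d_fake):
    """Monte Carlo estimate of V(D, G).

    d_real: Discriminator outputs D(x) on real samples, each in (0, 1).
    d_fake: Discriminator outputs D(G(z)) on generated samples, each in (0, 1).
    """
    real_term = sum(math.log(d) for d in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - d) for d in d_fake) / len(d_fake)
    return real_term + fake_term


# A confident, correct Discriminator pushes V toward its upper bound of 0.
confident = value_function([0.9, 0.95], [0.1, 0.05])

# A Discriminator that can only guess outputs 0.5 everywhere, giving
# V = log(0.5) + log(0.5) = -log(4).
guessing = value_function([0.5, 0.5], [0.5, 0.5])
```

Here `guessing` equals -log 4 (about -1.386), the value reached when the Discriminator cannot tell real from fake, while `confident` is closer to 0.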

Loss Functions in GANs

The choice of loss function plays a critical role in training GANs. One common choice is the Binary Cross-Entropy (BCE) loss, which measures how far the Discriminator's predicted probabilities are from the true labels (real or fake). Minimizing it trains the Discriminator to tell real data from generated data, and the Generator is trained in turn to make the Discriminator's predictions on generated samples wrong.

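The BCE loss is defined as:

$$
\mathcal{L}_{\text{BCE}} = -\frac{1}{N} \sum_{i=1}^{N} \left[ y_i \log \hat{y}_i + (1 - y_i) \log(1 - \hat{y}_i) \right]
$$

where N is the number of samples, y_i is the true label (1 for real, 0 for fake), and $\hat{y}_i$ is the predicted probability that sample i is real.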
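As a quick worked example, the BCE loss can be computed directly in plain Python. This is a minimal sketch: the function name `bce_loss`, the clipping constant `eps`, and the sample predictions are assumptions for illustration.

```python
import math


def bce_loss(y_true, y_pred, eps=1e-12):
    """Mean binary cross-entropy between labels (0 or 1) and predicted probabilities."""
    total = 0.0
    for y, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
        total += y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return -total / len(y_true)


# Discriminator batch: two real samples (label 1) and two generated ones (label 0).
labels = [1, 1, 0, 0]
good_predictions = [0.9, 0.8, 0.2, 0.1]
poor_predictions = [0.4, 0.3, 0.7, 0.6]

good_loss = bce_loss(labels, good_predictions)
poor_loss = bce_loss(labels, poor_predictions)
```

The Discriminator that assigns high probability to real samples and low probability to generated ones gets the smaller loss, so `good_loss` is lower than `poor_loss`.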

Challenges in Training GANs

Training GANs is notoriously difficult. Some of the main challenges include:

Mode Collapse: The Generator produces only a limited variety of outputs, covering a few modes of the data distribution instead of its full diversity.

Non-Convergence: The training may never converge; the Generator and Discriminator keep oscillating.

Vanishing Gradients: This occurs when the Discriminator gets too good, and the Generator's gradients disappear, halting its learning process.
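The vanishing-gradient problem can be seen in a short calculation. Writing the Discriminator output as D = sigmoid(a) for a logit a, the derivative of the Generator's minimax term log(1 - D) with respect to a is -sigmoid(a), which shrinks toward zero as the Discriminator becomes confident that a sample is fake (a very negative). A commonly used alternative, not covered in this post and shown here only for contrast, is the non-saturating loss -log(D), whose derivative is -(1 - sigmoid(a)). The function names below are hypothetical.

```python
import math


def sigmoid(a):
    """Numerically stable logistic function."""
    if a >= 0:
        return 1.0 / (1.0 + math.exp(-a))
    e = math.exp(a)
    return e / (1.0 + e)


def minimax_grad(a):
    """d/da of log(1 - sigmoid(a)): the Generator term in the minimax objective."""
    return -sigmoid(a)


def non_saturating_grad(a):
    """d/da of -log(sigmoid(a)): the non-saturating Generator loss."""
    return -(1.0 - sigmoid(a))


# The logit a is the Discriminator's pre-sigmoid score for a generated sample.
for a in (0.0, -2.0, -5.0, -10.0):
    print(f"logit={a:6.1f}  minimax={minimax_grad(a):.6f}  "
          f"non_saturating={non_saturating_grad(a):.6f}")
```

As the logit becomes more negative, the minimax gradient approaches 0 while the non-saturating gradient approaches -1, so the latter still gives the Generator a usable signal.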

Understanding these core concepts is crucial for appreciating the sophistication of GANs and their wide range of applications, which we will discuss next week.

Variants of GANs

GANs have evolved significantly since their inception. Various researchers have proposed enhancements and variants, each addressing specific challenges and applications. Here, we explore some of the most influential variants.

1. DCGAN (Deep Convolutional GAN)

Overview: DCGAN, introduced by Radford et al., is a pivotal variant that integrates convolutional neural networks (CNNs) into GANs. This architecture is specifically known for stabilizing the training process and generating high-quality images.

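Key Features: It uses strided convolutional layers in the discriminator and transposed-convolution layers in the generator, in place of pooling and fully connected layers. Batch normalization is used in both networks, with LeakyReLU activations in the discriminator and ReLU activations (with a Tanh output layer) in the generator.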

Impact: DCGAN has been instrumental in the advancement of unsupervised learning and has set a precedent for the use of CNNs in GAN architectures.

Reference Paper: Radford, A., Metz, L., & Chintala, S. (2015). "Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks." arXiv:1511.06434.

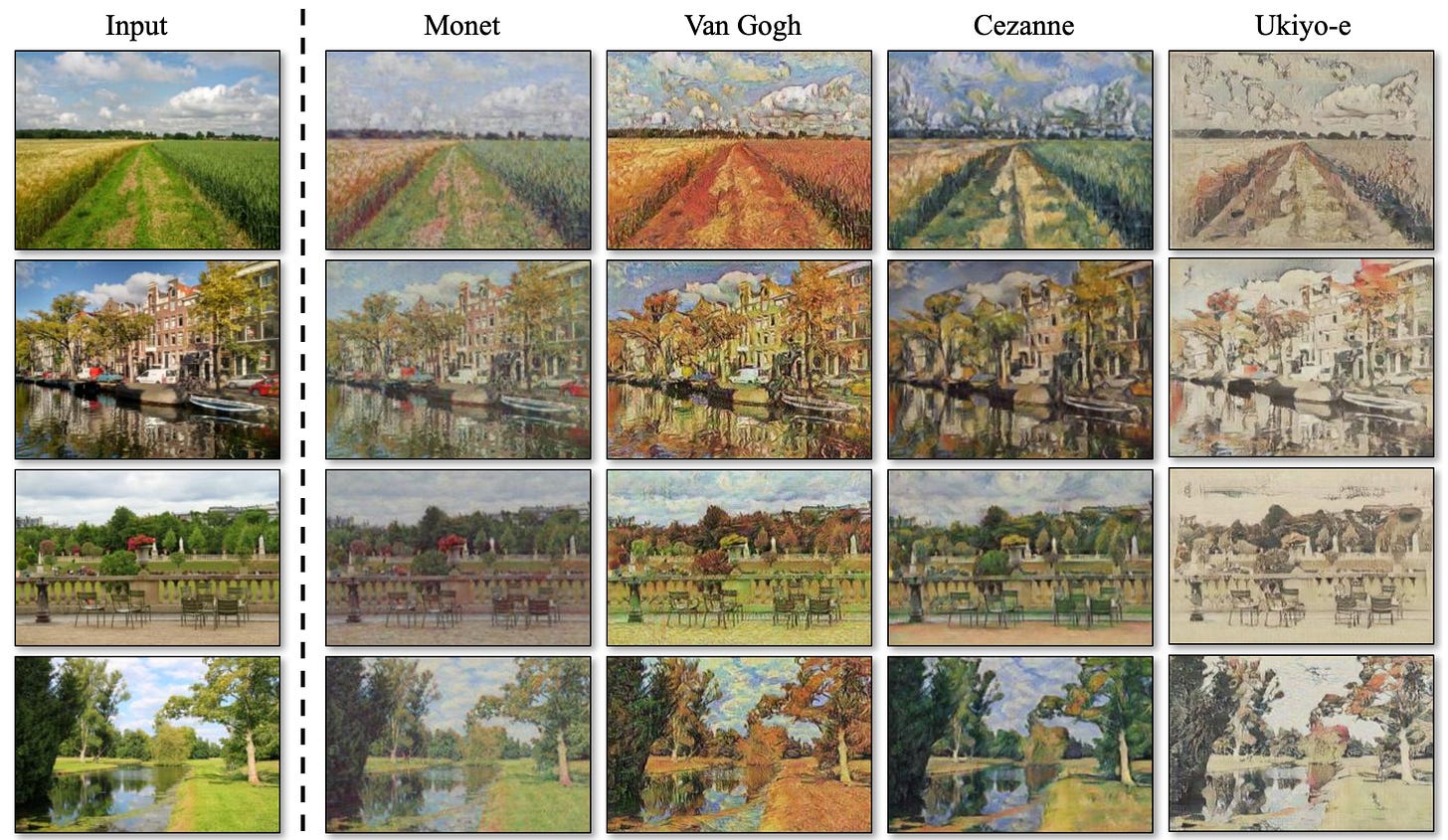
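As a rough illustration of how DCGAN's convolutional and transposed-convolution layers change spatial resolution, the sketch below applies the standard output-size formulas for both layer types (no dilation, no output padding). The layer settings (4x4 kernels, stride 2, padding 1, and a 64x64 image) are a commonly used configuration assumed here for illustration; they are not stated in this post.

```python
def conv_out(size, kernel, stride, padding):
    """Output width of a convolution (dilation 1)."""
    return (size + 2 * padding - kernel) // stride + 1


def conv_transpose_out(size, kernel, stride, padding):
    """Output width of a transposed convolution (dilation 1, no output padding)."""
    return (size - 1) * stride - 2 * padding + kernel


# Assumed layer settings as (kernel, stride, padding).
first_up = (4, 1, 0)     # projects the 1x1 noise "image" to 4x4
up = (4, 2, 1)           # doubles the resolution
down = (4, 2, 1)         # halves the resolution
last_down = (4, 1, 0)    # collapses 4x4 to a single score

generator_sizes = [1]
for layer in [first_up, up, up, up, up]:
    generator_sizes.append(conv_transpose_out(generator_sizes[-1], *layer))

discriminator_sizes = [64]
for layer in [down, down, down, down, last_down]:
    discriminator_sizes.append(conv_out(discriminator_sizes[-1], *layer))

print(generator_sizes)      # [1, 4, 8, 16, 32, 64]
print(discriminator_sizes)  # [64, 32, 16, 8, 4, 1]
```

Under these assumed settings the generator grows a 1x1 noise input to a 64x64 image in five layers, and the discriminator mirrors that path back down to a single score.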

2. CycleGAN

Overview: CycleGAN, introduced by Zhu et al., is designed for image-to-image translation tasks without paired examples. This model is known for its ability to translate images from one domain to another (e.g., horses to zebras).

Key Features: It introduces a cycle consistency loss: an image translated to the other domain and back again should match the original. This constraint makes it possible to learn a mapping between two unpaired image domains.

Impact: CycleGAN has broad applications in style transfer, photo-realistic image generation, and more.

Reference Paper: Zhu, J. Y., Park, T., Isola, P., & Efros, A. A. (2017). "Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks." arXiv:1703.10593.
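The cycle consistency idea can be sketched with toy one-dimensional "images". Below, `g` maps domain X to domain Y and `f` maps Y back to X; the loss is the mean absolute difference between each input and its round-trip reconstruction, in both directions. The mappings are simple arithmetic stand-ins chosen for illustration, not trained networks, all names are hypothetical, and the adversarial losses that CycleGAN also uses are left out.

```python
def l1_distance(a, b):
    """Mean absolute difference between two equal-length sequences."""
    return sum(abs(u - v) for u, v in zip(a, b)) / len(a)


def cycle_consistency_loss(g, f, x_batch, y_batch):
    """L1 cycle loss: x -> g -> f should return x, and y -> f -> g should return y."""
    forward = sum(l1_distance(f(g(x)), x) for x in x_batch) / len(x_batch)
    backward = sum(l1_distance(g(f(y)), y) for y in y_batch) / len(y_batch)
    return forward + backward


# Toy "translators" acting on lists of pixel values.
def g(x):            # X -> Y: brighten
    return [v + 1.0 for v in x]


def f_inverse(y):    # Y -> X: exact inverse of g
    return [v - 1.0 for v in y]


def f_lossy(y):      # Y -> X: not an inverse of g
    return [0.5 * v for v in y]


x_batch = [[0.0, 1.0, 2.0], [3.0, 4.0, 5.0]]
y_batch = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]

consistent = cycle_consistency_loss(g, f_inverse, x_batch, y_batch)
inconsistent = cycle_consistency_loss(g, f_lossy, x_batch, y_batch)
```

When the two mappings invert each other, as with `g` and `f_inverse`, the loss is zero; with `f_lossy` the round trip no longer reproduces the input and the loss is positive.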

3. StyleGAN

Overview: Developed by Karras et al. at NVIDIA, StyleGAN introduces an alternative generator architecture for generative adversarial networks, focusing on high-resolution and photorealistic image generation.

Key Features: It borrows techniques from the style transfer literature, allowing fine control over the style of the generated image at different scales. The architecture injects style information through adaptive instance normalization (AdaIN).

Impact: StyleGAN has been a breakthrough in generating highly realistic human faces and other complex images.

Reference Paper: Karras, T., Laine, S., & Aila, T. (2018). "A Style-Based Generator Architecture for Generative Adversarial Networks." arXiv:1812.04948.
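AdaIN itself is a small operation: each feature channel is normalized to zero mean and unit variance, then rescaled and shifted by a style-dependent scale and bias. The sketch below applies it to a single channel stored as a flat list. The `eps` term and the function name are assumptions for illustration, and in StyleGAN the scale and bias would come from a learned mapping of the latent code rather than being passed in by hand.

```python
import math


def adain(channel, scale, bias, eps=1e-8):
    """Adaptive instance normalization for one feature channel.

    Normalizes `channel` to zero mean and unit variance, then applies the
    style's scale and bias: scale * (x - mean) / std + bias.
    """
    n = len(channel)
    mean = sum(channel) / n
    var = sum((v - mean) ** 2 for v in channel) / n
    std = math.sqrt(var + eps)
    return [scale * (v - mean) / std + bias for v in channel]


features = [1.0, 2.0, 3.0, 4.0]
styled = adain(features, scale=2.0, bias=5.0)

# Statistics of the output are set by the style, not by the input features.
styled_mean = sum(styled) / len(styled)
styled_std = math.sqrt(sum((v - styled_mean) ** 2 for v in styled) / len(styled))
```

After the call, `styled_mean` is approximately 5.0 and `styled_std` approximately 2.0, matching the supplied bias and scale.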

These variants demonstrate the versatility and adaptability of GANs to different challenges in image generation and beyond. Each has contributed significantly to the field, paving the way for more innovative applications and research.

Conclusion

In this first week on Generative Adversarial Networks (GANs), we've established a strong foundation. We began with an overview of GANs, their origins, and their role in AI/ML. We examined the dynamic between the Generator and Discriminator and how the two compete during adversarial training. We also addressed training challenges like mode collapse and non-convergence.

We explored various GAN architectures like DCGAN and StyleGAN, each offering unique solutions and opening new possibilities.

Next week, we'll delve into GANs' diverse applications, practical training strategies, and ethical aspects. We'll also include a PyTorch tutorial for building a basic GAN, providing practical insights for hands-on experimentation.